OpenAI Spring Update

GPT-4o

What is it?

The "o" stands for "omni", reflecting the model's "omni"-modal approach: it handles text, audio, images, etc.

Response times for these other modalities are greatly improved. The headline numbers:

- 2x faster than GPT-4 Turbo

- 50% cheaper token usage through the API

- 5x higher rate limits
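
To get a feel for what "50% cheaper" means on a bill, here is a minimal sketch of the arithmetic. The baseline prices and token counts are placeholder numbers I picked for illustration, not an official price list; only the 50% ratio comes from the announcement, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the cost in dollars, given prices per 1M tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Assumed baseline prices (dollars per 1M tokens), for illustration only.
BASELINE_INPUT_PRICE = 10.0
BASELINE_OUTPUT_PRICE = 30.0

# "50% cheaper" from the announcement, applied to both input and output prices.
DISCOUNT = 0.5

# Hypothetical monthly workload.
input_tokens = 200_000
output_tokens = 50_000

baseline_cost = estimate_cost(
    input_tokens, output_tokens, BASELINE_INPUT_PRICE, BASELINE_OUTPUT_PRICE
)
discounted_cost = estimate_cost(
    input_tokens,
    output_tokens,
    BASELINE_INPUT_PRICE * (1 - DISCOUNT),
    BASELINE_OUTPUT_PRICE * (1 - DISCOUNT),
)

print(f"baseline:   ${baseline_cost:.2f}")
print(f"discounted: ${discounted_cost:.2f}")
```

Because the discount is applied evenly to input and output prices, the total halves regardless of the input/output mix. If the two prices were cut by different amounts, the saving would depend on the workload.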

Basically, you can talk back and forth with the model by voice without much delay. In fact, the response times are comparable to human response times in conversation.

If you have seen Marques Brownlee's reviews of the Rabbit R1 or the AI Pin, you will understand why this is an important step.

Also importantly, you can interrupt the replies, which is a good quality-of-life feature.

Emotion is detected from the user's voice and recognised by the model, which is interesting.

I'm interested in how this is represented within the model: does emotion have its own separate parameter space, or do words spoken differently each occupy their own space? I assume something like Whisper's architecture is used to extract text from audio, or I could be completely wrong and this whole step happens inside the hidden body of the model.

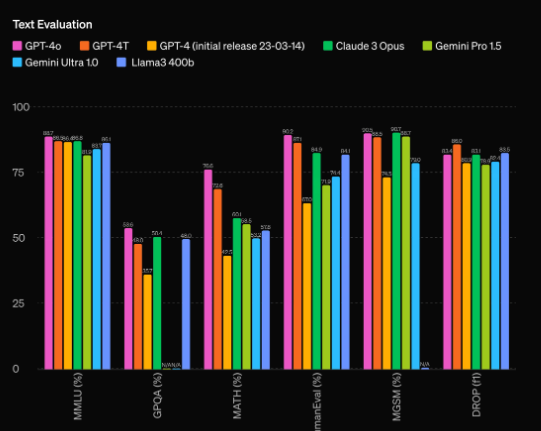

The new model sports all the new capabilities while also being slightly better than GPT-4 Turbo for text.

Great!

That's all for voice, definitely the important new modality for using GPT models, but are there any improvements on video?

Yes, vision capabilities are said to have been improved. I believe this is mostly around recognition of live video feeds, which further adds to the quality-of-life improvements.

Definitely, this is the correct delivery of what the AI Pin and Rabbit R1 were trying to achieve.

Screen sharing with the GPT model while live coding? This seems like a very interesting step: it side-steps the need to integrate with the IDE and works directly from vision.

But I would think the more important use of this will be with all the other applications: emails, websites, Figma, etc. Is it just a real-time assistant in any workspace?

This is only available through the desktop app. Where can I download this?...

Overall, a lot of usability improvements. I'm interested to see how far this can be taken for users.